Additional Compute Services

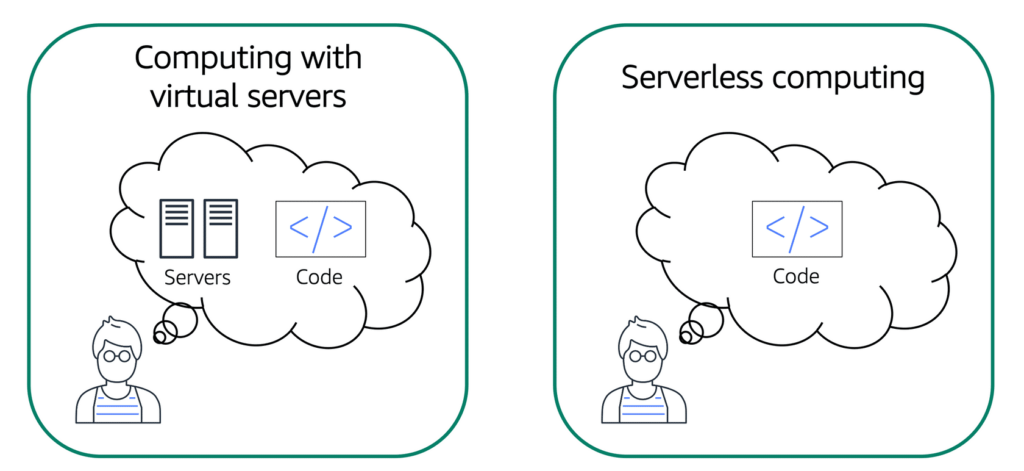

Serverless computing

Amazon Elastic Compute Cloud (Amazon EC2) lets a company run virtual servers in the cloud by following this sequence:

- Provision instances (virtual servers)

- Upload the code

- Continue to manage the instances while the application is running

But there is an alternative: serverless computing.

The term “serverless” means that the code still runs on servers, but the company does not need to provision or manage those servers. With serverless computing, people can focus on innovating new products and features instead of maintaining servers.

Another benefit of serverless computing is that serverless applications scale automatically. Serverless computing can adjust an application’s capacity by modifying its units of consumption, such as throughput and memory.

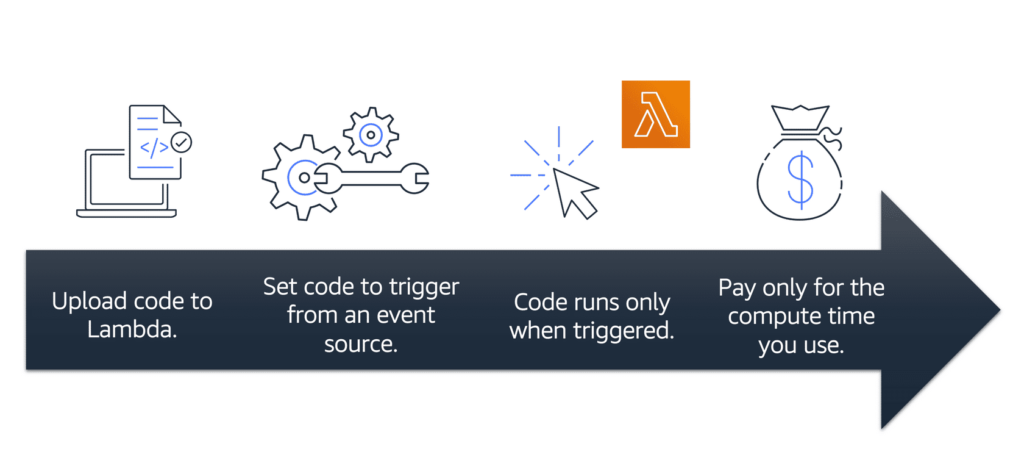

AWS Lambda is an AWS service for serverless computing. With AWS Lambda, a company pays only for the compute time it consumes: charges apply only while the code is running. Code can be run for virtually any type of application or backend service, with zero administration.
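The pay-per-use idea can be illustrated with a little arithmetic. The sketch below assumes the duration charge is proportional to GB-seconds (allocated memory multiplied by running time). The function name and the rate in the usage example are hypothetical placeholders rather than actual AWS prices, and any per-request charge is left out.

```python
def estimate_compute_charge(invocations, avg_duration_ms, memory_mb, price_per_gb_second):
    """Estimate the duration charge for a function billed per GB-second.

    price_per_gb_second is a placeholder supplied by the caller;
    it is not an actual AWS price.
    """
    seconds_per_invocation = avg_duration_ms / 1000
    memory_gb = memory_mb / 1024
    gb_seconds = invocations * seconds_per_invocation * memory_gb
    return gb_seconds * price_per_gb_second


# Hypothetical workload: one million 200 ms invocations with 512 MB of memory.
charge = estimate_compute_charge(1_000_000, 200, 512, price_per_gb_second=0.00002)
print(f"Estimated duration charge: {charge:.2f}")
```

If the function is never invoked, the estimate is zero, which is the point of paying only while the code is running.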

For example, a simple Lambda function might automatically resize images that are uploaded to the AWS Cloud. In this case, the function is triggered whenever a new image is uploaded.

AWS Lambda works in the following way:
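A minimal sketch of such a function in Python might look like the following, assuming the trigger is an Amazon S3 upload notification. The thumbnail width and the `scaled_size` helper are hypothetical, and the actual download, resize, and upload steps are left out so that the example stays self-contained.

```python
from urllib.parse import unquote_plus

MAX_WIDTH = 128  # hypothetical thumbnail width, in pixels


def scaled_size(width, height, max_width=MAX_WIDTH):
    """Return (width, height) scaled down to max_width, keeping the aspect ratio."""
    if width <= max_width:
        return width, height
    return max_width, max(1, round(height * max_width / width))


def lambda_handler(event, context):
    """Entry point that Lambda calls each time the upload event fires."""
    to_resize = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        # A real function would download the image here, resize it
        # (for example to scaled_size(...)), and upload the result.
        to_resize.append({"bucket": bucket, "key": key})
    return {"to_resize": to_resize}
```

No server appears anywhere in this code: the function only describes what to do with one event, and the service decides when and where to run it.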

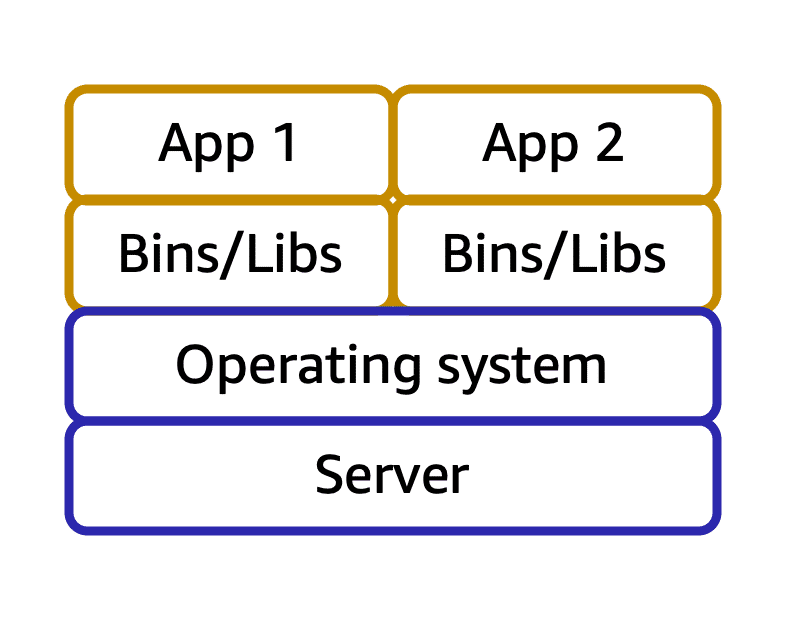

Containers

Containers provide a standard way to package an application’s code and dependencies into a single object. Containers can also be used for processes and workflows that have essential requirements for security, reliability, and scalability.

How containers work:

Suppose an application developer wants the application’s environment to remain consistent regardless of where it is deployed. Packaging the application in a container helps reduce the time spent debugging applications and diagnosing differences between computing environments.

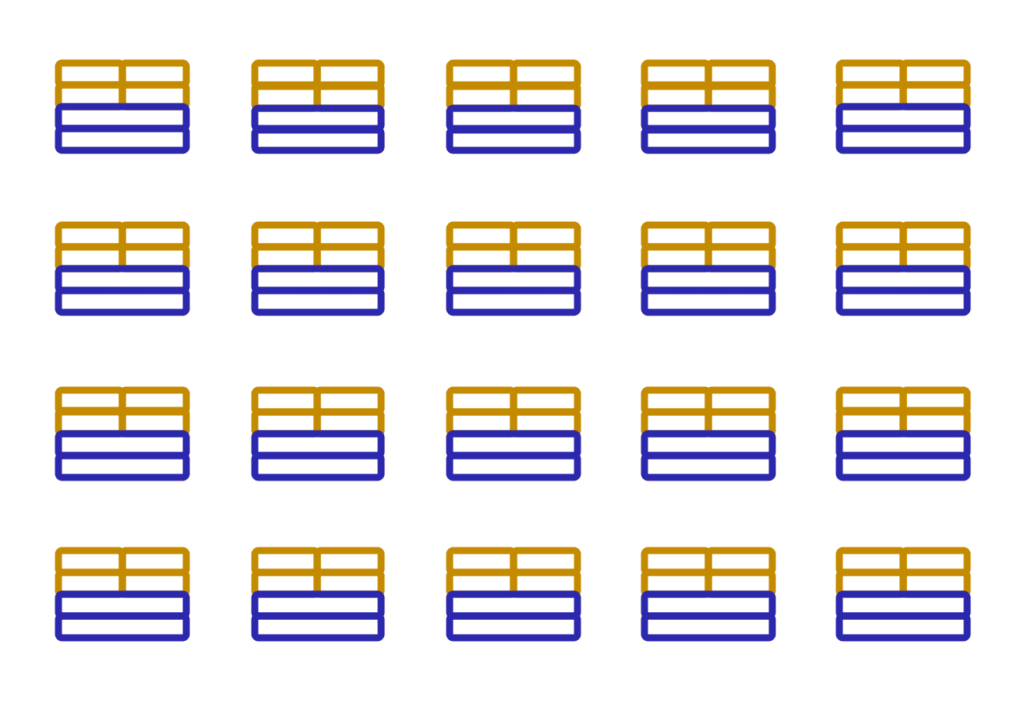

When running containerized applications, it’s important to consider scalability. Suppose that instead of a single host with multiple containers, a developer has to manage tens of hosts with hundreds of containers, or possibly hundreds of hosts with thousands of containers. At a large scale, imagine how much time it might take the developer to monitor memory usage, security, logging, and so on.