How Does Hadoop Work?

Hadoop runs code across a cluster of computers. In doing so, it performs the following core tasks:

- Data is initially divided into directories and files. Files are divided into uniformly sized blocks of 128 MB or 64 MB (preferably 128 MB)

- These blocks are then distributed across the cluster nodes for further processing

- HDFS, which sits on top of the local file system, supervises the processing

- Blocks are replicated for handling hardware failure

- Checking that the code was executed successfully

- Performing the sort that takes place between the map and reduce stages

- Sending the sorted data to the computer that runs the reduce stage

- Writing the debugging logs for each job
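The sort that sits between the map and reduce stages can be illustrated with a minimal single-process sketch in Python. This is not Hadoop's API: the names `map_phase`, `reduce_phase`, and `run_job` are hypothetical, and a word count is assumed as the job. On a real cluster each stage would run on many nodes in parallel.

```python
from itertools import groupby
from operator import itemgetter


def map_phase(line):
    """Emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def reduce_phase(word, counts):
    """Sum all counts collected for a single word."""
    return word, sum(counts)


def run_job(lines):
    # Map: each line is processed independently of the others.
    mapped = [pair for line in lines for pair in map_phase(line)]
    # Sort: order the pairs by key so that equal keys become adjacent.
    mapped.sort(key=itemgetter(0))
    # Reduce: each group of equal keys is handed to one reduce call.
    return dict(
        reduce_phase(word, (count for _, count in group))
        for word, group in groupby(mapped, key=itemgetter(0))
    )


result = run_job(["Hadoop stores data", "Hadoop processes data"])
print(result)  # {'data': 2, 'hadoop': 2, 'processes': 1, 'stores': 1}
```

Sorting by key is what lets the reduce step see all values for one key together, which is why the sorted data can then be sent to a single machine for reduction.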

Advantages of Hadoop

- The Hadoop framework allows users to quickly write and test distributed systems. It is efficient: it automatically distributes the data and work across the machines and, in turn, uses the underlying parallelism of the CPU cores

- Hadoop does not rely on hardware to provide fault tolerance and high availability (FTHA). Instead, the Hadoop library itself is designed to detect and handle failures at the application layer

- Servers can be added to or removed from the cluster dynamically, and Hadoop continues to operate without interruption

- Apart from being open source, Hadoop is compatible with all platforms because it is Java based

HDFS

The Hadoop Distributed File System (HDFS) was developed using a distributed file system design and runs on commodity hardware. Unlike other distributed systems, HDFS is highly fault tolerant and designed to be deployed on low-cost hardware.

HDFS holds very large amounts of data and provides easy access to it. To store such volumes, files are spread across multiple machines. They are stored redundantly to protect the system from data loss in case of failure. HDFS also makes the data available to applications for parallel processing.

Features of HDFS are:

- It is suitable for distributed storage and processing

- Hadoop provides a command interface to interact with HDFS

- The built-in servers of the namenode and datanode help users easily check the status of the cluster

- It provides streaming access to file system data

- HDFS provides file permissions and authentication

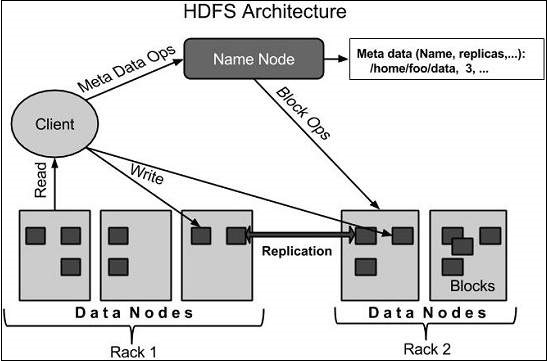

Given below is the architecture of a Hadoop File System :

HDFS follows a master-slave architecture and has the following elements:

Namenode – the namenode is software that runs on commodity hardware with the GNU/Linux operating system. The machine running the namenode acts as the master server and performs the following tasks:

- Manages the file system namespace

- Regulates clients’ access to files

- Executes file system operations such as renaming, closing, and opening files and directories

Datanode – the datanode is software that runs on commodity hardware with the GNU/Linux operating system. Every node (commodity hardware/system) in a cluster runs a datanode. These nodes manage the data storage of their system:

- Datanodes perform read-write operations on the file system, as requested by clients

- Datanodes perform operations such as block creation, deletion, and replication according to the instructions of the namenode
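The division of labour between the namenode and the datanodes can be sketched with a toy in-memory model. Everything here is hypothetical: the class `ToyNameNode`, its methods, the node names, and the simple round-robin placement are assumptions made for illustration, not HDFS's actual placement policy or API.

```python
class ToyNameNode:
    """Hypothetical model: records which datanodes hold each block of each file."""

    def __init__(self, datanodes, replication=3):
        if replication > len(datanodes):
            raise ValueError("replication factor exceeds number of datanodes")
        self.datanodes = list(datanodes)
        self.replication = replication
        self.block_map = {}  # (path, block_index) -> list of datanode names
        self._next = 0       # round-robin cursor (an assumed placement rule)

    def add_file(self, path, num_blocks):
        """Assign each block of the file to `replication` distinct datanodes."""
        count = len(self.datanodes)
        for index in range(num_blocks):
            self.block_map[(path, index)] = [
                self.datanodes[(self._next + offset) % count]
                for offset in range(self.replication)
            ]
            self._next = (self._next + 1) % count

    def handle_failure(self, dead_node):
        """Forget a failed datanode and re-replicate the blocks it held."""
        self.datanodes.remove(dead_node)
        for replicas in self.block_map.values():
            if dead_node in replicas:
                replicas.remove(dead_node)
                # Pick any surviving node that does not already hold this block.
                spare = next((n for n in self.datanodes if n not in replicas), None)
                if spare is not None:
                    replicas.append(spare)


namenode = ToyNameNode(["dn1", "dn2", "dn3", "dn4"], replication=3)
namenode.add_file("/logs/a.txt", num_blocks=2)
namenode.handle_failure("dn2")
print(namenode.block_map)
```

In this model the namenode only keeps metadata and issues instructions; the block contents themselves would live on the datanodes.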

Block – user data is stored in HDFS files. Each file is divided into one or more segments, which are stored on individual datanodes. These file segments are called blocks. In other words, the minimum amount of data that HDFS can read or write is called a block. The default block size is 128 MB in Hadoop 2.x and later (64 MB in Hadoop 1.x), and it can be changed as needed in the HDFS configuration
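The block arithmetic can be worked through with a short Python sketch. The helper `split_into_blocks` is a hypothetical name, and a block size of 128 MB is assumed as the default; the final block of a file holds only the leftover bytes.

```python
MB = 1024 * 1024


def split_into_blocks(file_size, block_size=128 * MB):
    """Return the sizes in bytes of the blocks that a file of file_size bytes occupies."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        # The last block contains only the remaining bytes; it is not padded.
        sizes.append(remainder)
    return sizes


sizes = split_into_blocks(300 * MB)
print([size // MB for size in sizes])  # [128, 128, 44]
```

A 300 MB file therefore occupies three blocks, and with a 64 MB block size the same file would occupy five.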

Goals of HDFS are:

- Fault detection and recovery – since HDFS includes a large number of commodity hardware components, component failure is frequent. Therefore, HDFS should have mechanisms for quick and automatic fault detection and recovery

- Huge datasets – HDFS should have hundreds of nodes per cluster to manage applications with huge datasets

- Hardware at data – a requested task can be done efficiently when the computation takes place near the data. Especially where huge datasets are involved, this reduces network traffic and increases throughput
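The "hardware at data" goal can be illustrated with a small scheduling sketch. The function `pick_node` and the node names are hypothetical, and the rule shown (prefer a free node that already stores the block) is a simplified assumption rather than Hadoop's actual scheduler.

```python
def pick_node(block_replicas, free_nodes):
    """Choose where to run a task that reads one block.

    Returns (node, is_local). A free node that already stores the block is
    preferred; otherwise any free node is used and the block must be fetched.
    """
    for node in block_replicas:
        if node in free_nodes:
            return node, True  # local read: no block transfer over the network
    if not free_nodes:
        raise RuntimeError("no free node available")
    # Remote read: the block has to be copied from one of its replicas.
    return sorted(free_nodes)[0], False


print(pick_node(["dn1", "dn3"], {"dn2", "dn3"}))  # ('dn3', True)
print(pick_node(["dn1"], {"dn2", "dn4"}))         # ('dn2', False)
```

The larger the block, the more network traffic a local placement saves, which is why moving the computation is usually cheaper than moving the data.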